Ramblings from an Old Scissors-cutter

by Mike Rankin, Crops and Soils Agent

Fond du Lac County – UW Extension

Getting old has its disadvantages. With each passing day there are aches and pains in new places, resulting in more visits to the doctor than ever seemed possible or desired. Change becomes increasingly difficult but necessary to keep up with the times. Everything seems too expensive because it’s measured by prices of days gone by. People you remember as babies are having babies and some of those you remember as young adults are no longer with us.

Of course I’ve also found that growing old has its advantages. Perhaps at the top of the list is the ability to compare and analyze based on extensive personal experience. This of course sometimes leads to painstaking historical analysis on a topic at hand… appreciated by some, not so much by others. I cannot lend much historical perspective to topics centered on politics, the arts, or deer hunting, but I can speak extensively about alfalfa scissors-cutting. I know, it’s a meager lot in life, but somebody has to do it. For those with an interest, what follows are some of my observations and educated opinions about this rite of spring that ranks up there with “pitchers and catchers report.”

In the beginning…

When I entered the extension scene in the late 1980s, forage quality testing had reached the point of being a known commodity. Near Infrared Reflectance Spectroscopy (NIRS) offered a quick, economical, and reasonably accurate assessment of forage quality. However, the idea of testing forage before, rather than after, harvest was a new concept made possible by NIRS. Further, corn silage had not reached the prominence in Wisconsin that it has today, and so milk production was largely dictated by alfalfa forage quality. The single biggest problem of the day was simple… forage quality on many farms was not adequate to support the high milk production made possible by advancements in animal breeding. Two- and three-cut alfalfa harvest systems were being replaced by four-cut systems. Along with this came the realization that spring alfalfa growth could be the best forage of the year if cut early and the worst of the year if cut late.

Educators, ag professionals, and producers jumped all over the information reported by local alfalfa scissors-cutting programs. At the time, there was no internet, so results were mailed, reported on an “Alfalfa Hotline” phone system, or sent out through the media. These efforts gained steam in the 1990s and results were used by literally thousands of producers each year. The same is true today, but the internet has made it possible to both receive and disseminate the data more quickly. More importantly, scissors-cut programs got producers thinking about alfalfa in mid-May, usually before corn planting was done. Further, they have helped with the early identification of those years where alfalfa forage quality is unusually high or low because of abnormal temperature/moisture interactions.

The process…

Through the years I’ve taken scissors-cut samples on many beautiful spring mornings and on many not-so-beautiful ones: driving rain storms, cold winds, and once an unexpected May snow that I had to dig my samples out of. I’m not sure who coined the “scissors-cutting” term but it certainly has withstood the test of time. Likely, not many project coordinators even use scissors to sample alfalfa. It doesn’t matter. In recent years I’ve found a pocket knife works about as easily as any high-priced cutting device. It would seem that the sampling and analysis of standing forage would be fairly straightforward. However, it became apparent early on that there was plenty of opportunity for error, as erratic results from one sampling date to the next occurred more often than anyone would have liked. Unexplainable results (e.g., forage quality getting better from one date to the next or dropping by inexplicable amounts) were often traced to factors such as:

1) sampling in different field areas,

2) sampling at different times of the day,

3) sampling at different plant heights,

4) lab workers splitting the whole plant sample resulting in a subsample that was not representative,

5) early NIRS equations that did not adequately measure components of fresh forage,

6) lab worker error, and

7) inherent error in the entire process of a small sample size representing an entire field.

Although errors still can and do exist from time to time, I’ve learned they can be minimized or overcome with some procedural adjustments (a fresh forage NIRS equation has also been developed). First, I stake out a small (3′ x 3′) representative area that the cooperating farmer leaves uncut. All samples are taken from this area early in the morning. Further, I always cut and submit two samples from the area on each sampling date. This allows me to average the results and provides a backup if, for one reason or another, one of the results is erroneous. If both samples are telling me the same thing, I have greater confidence in the results. I try to keep the samples relatively small to help ensure the lab workers will cut and dry the entire sample. Finally, unless weather conditions are extremely good for alfalfa growth (unusually warm), I’ve found sampling more than once per week doesn’t do much good because unavoidable error is often greater than the forage quality change occurring over just two or three days.
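The duplicate-sample check described above can be sketched in a few lines of Python. This is only an illustration: the function name and the 15-point agreement tolerance are assumptions made for the example, not part of any published sampling protocol.

```python
from statistics import mean


def summarize_duplicates(rfv_a, rfv_b, max_gap=15.0):
    """Average two same-day RFV results and flag pairs that disagree.

    max_gap is a hypothetical tolerance in RFV points (an assumption
    for this sketch, not a value taken from the article).
    """
    gap = abs(rfv_a - rfv_b)
    return {
        "mean_rfv": mean([rfv_a, rfv_b]),  # value to report for the date
        "gap": gap,                        # spread between the two samples
        "agree": gap <= max_gap,           # False suggests one result may be off
    }


# Example with made-up lab results for one sampling date.
print(summarize_duplicates(182.0, 176.0))
```

When the two results disagree, the average is of little use; the pair is better treated as a prompt to look for one of the error sources listed earlier.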

Enter Albrecht et al…

Perhaps the biggest change to the whole scissors-cutting effort came in the early to mid-1990s with the introduction of a new system for estimating alfalfa forage quality. Dr. Ken Albrecht, University of Wisconsin Forage Researcher, and his students evaluated a number of plant-based criteria to determine which ones correlated best with forage quality. Not surprisingly, plant height showed a strong relationship, and coupling it with plant maturity made the prediction a bit better still. Albrecht released his equations describing the relationship and I quickly transferred them into a spreadsheet program to develop a usable table format that estimated relative feed value (RFV). The system, known as Predictive Equations for Alfalfa Quality (PEAQ), was widely accepted by ag professionals and received a tremendous amount of media attention. The user needed to randomly select several small areas within an alfalfa field, measure the tallest stem in each, and note the most advanced stem in terms of maturity. Armed with this information, the user simply looked at the table to find the corresponding neutral detergent fiber (NDF) or RFV.

The PEAQ system was quick, easy, and there was no lab cost. For several years many extension agents reported both scissors-cut (fresh forage) analysis and PEAQ results. I recall getting numerous phone calls and having teleconference discussions centered on either which system was best or why the two approaches didn’t always give the same results. As for the latter, all of the potential errors for scissors-cutting listed earlier apply here, along with an additional list for estimating forage quality using PEAQ. Many extension agents adopted PEAQ as the only method used for reporting forage quality and still do to this day.

Through the years, I’ve gained some working knowledge using PEAQ as a method to estimate forage quality. First, it’s important to realize the values are based on a one-inch cutting height. Hence, cutting at a higher stubble height (as most producers do) results in a higher value than what is estimated by PEAQ. For this reason, I don’t believe the PEAQ estimate for standing forage is going to be 20 to 30 RFV points higher than what is actually harvested, as we initially predicted. My experience is that the two values will be pretty close unless harvest losses are considerable. Next, I’ve learned that in every alfalfa field there are genetic mutations resulting in the occasional extremely tall stem (5-6 inches taller than every other plant). Stay away from these when making estimates or forage quality will be grossly underestimated. Further, once alfalfa begins to lodge, the precision of PEAQ will soon be lost. In some years this might be relatively early in the growth process; in other years it might not happen at all. When looking for the stem with the most advanced stage, the protocol is clear that the bud or flower must be visible. Feeling a bud does not count as “bud stage.” In fact, I don’t even count “barely visible.” Finally, if you’re one of those who, like me, continues to both sample fresh forage and use PEAQ, it’s likely that you’ll find the two results will be farthest apart (most often with PEAQ being the lower value) with early vegetative samples, and the two methods will begin to converge as plants reach bud stage. Because PEAQ is based on a one-inch height, I like to also take my fresh plant samples at the same height.

It wasn’t too long after PEAQ’s entrance into the forage prediction game that the yardstick and spreadsheet table were replaced by the PEAQ stick. These were first made available by the former Wisconsin Forage Council and since have been manufactured by several private sector companies in addition to the Midwest Forage Association.

Replacing the “V” with a “Q”…

It’s human nature to always be in search of a better mousetrap. Relative feed value has been around since the 1970s and has served the industry well. It was developed as a forage quality index for alfalfa. Those who claim it does a poor job of describing quality in non-alfalfa forages… you’re right, but keep in mind it was never intended for that purpose (however, that didn’t stop people from using it that way). Through the years we have learned that RFV has some deficiencies, even for alfalfa. Nevertheless, the index deserves a lot of credit for the advancement in making quality alfalfa forage over the past 30 years.

Several years ago, Wisconsin researchers developed a new mousetrap… Relative Forage Quality (RFQ). Without going into detail, the index better predicted how forage would “feed.” Further, it worked across most forage types. Here is an important point to keep in mind… RFV is NOT RFQ. Hence, the initial move to simply change PEAQ sticks from an RFV reading to an RFQ reading was a bit premature. The PEAQ equations were developed to predict ADF and NDF, the components needed to calculate RFV. Relative forage quality correlates with RFV, but it is not the same, and predicting RFQ from RFV will often result in erroneous values for specific situations. Knowing that many factors impact fiber digestibility tells us that any simple prediction equation will only result in a gross estimation. My two suggestions are these:

1) report PEAQ results for what they are… RFV, and

2) if you want to know RFQ, spend some money to test the forage.
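For readers who want to see how ADF and NDF turn into RFV, here is a short Python sketch. The article itself does not give the formula; the coefficients below are the commonly published RFV calculation (digestible dry matter estimated from ADF, dry matter intake estimated from NDF), and the function name and sample values are illustrative only. Note that nothing here estimates fiber digestibility, which is the reason an RFV-type calculation cannot stand in for RFQ.

```python
def relative_feed_value(adf_pct, ndf_pct):
    """Compute RFV from ADF and NDF (both as % of dry matter).

    Uses the commonly published formula, which is assumed here and is
    not taken from the article:
      DDM (% of DM)          = 88.9 - 0.779 * ADF
      DMI (% of body weight) = 120 / NDF
      RFV                    = DDM * DMI / 1.29
    """
    ddm = 88.9 - 0.779 * adf_pct   # digestible dry matter
    dmi = 120.0 / ndf_pct          # dry matter intake
    return ddm * dmi / 1.29


# Illustrative values: alfalfa testing 30% ADF and 40% NDF.
print(round(relative_feed_value(30.0, 40.0), 1))
```

By construction, forage at roughly 41% ADF and 53% NDF comes out near an RFV of 100, which is the reference point of the index.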

Through the years, perhaps one of the hardest lessons I’ve had to learn as an educator is when to simplify and when to not simplify. Speaking from experience, either approach can get you in trouble if used incorrectly.

The big picture…

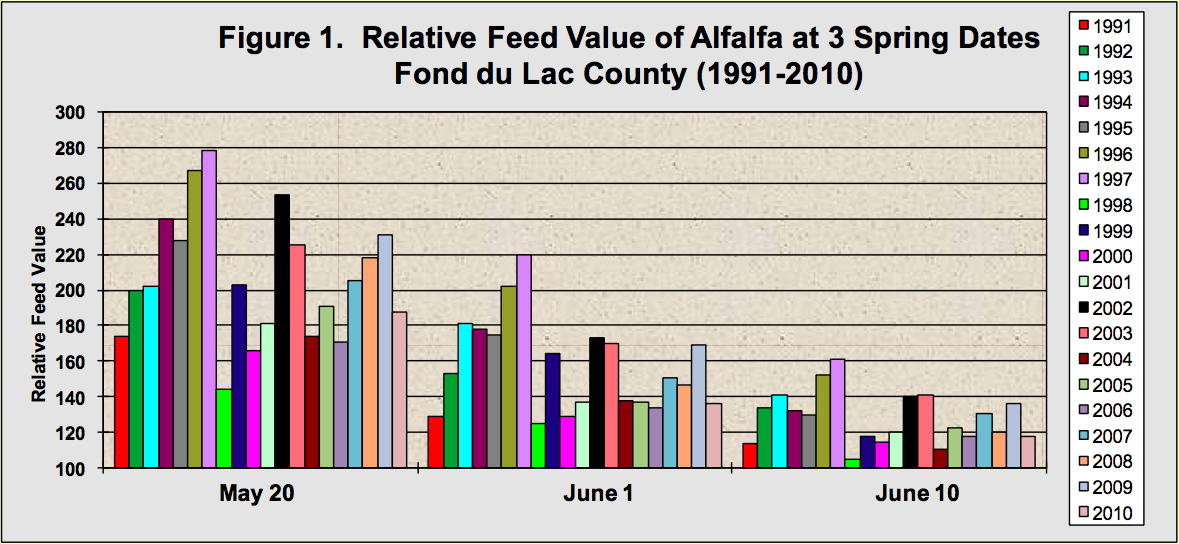

It’s always important to analyze and strive for future improvement. However, it’s equally important to look back and see if the collective efforts of many have resulted in change for the better. Twenty years ago there was a pretty good consensus that average to poor forage quality was a major limitation on many dairy farms. Scissors-cutting and the development of the PEAQ equations were not solely responsible for changing that trend, but I firmly believe they played a large role in putting the focus directly on improving quality of harvested alfalfa. No longer is calendar date or “first flower” used as a criterion for optimum harvest time. One of the things I’ve continued to do is map RFV on May 20th, June 1st, and June 10th for each year. The results are presented in Figure 1. Clearly, every year provides a new set of opportunities and challenges. On average, alfalfa RFV drops about 4 units per day, but that may or may not be the case this year, next year, or the year after that. Simplifying biological systems founded on temperature and moisture is a slippery slope. Although it may be true that sampling a few fields does not adequately describe every field, such an effort has always helped to eliminate what I like to refer to as “forage quality train wrecks” because we simply didn’t know.

The pendulum…

So where do we go from here? Alfalfa forage quality is still an important educational focus, but much less so than twenty years ago. High quality forage is now a mainstream concept on most farms. Having access to pre-harvest forage quality information has become an expectation in many areas. Another major change has been a much higher reliance on corn silage as a dairy cow diet component. Although this doesn’t significantly change the need for high quality alfalfa, it probably tempers the impact of somewhat lower quality forage on the dairy diet. Even so, I’ve been surprised by the results of our Wisconsin Alfalfa Yield and Persistence Project over the past few years, which have documented extremely high forage quality over the course of the growing season and, for that matter, the life of the stand. In some cases average RFQ values are in the 180 to 200 range! Great quality forage… yes… but now the question becomes whether or not some producers have swung the pendulum too far at the expense of significant dry matter yield loss (twenty years ago I never would have predicted making this statement). The biggest “knock” on alfalfa over the years (and certainly today) is that yields cannot compete with corn silage. As a rule of thumb, I figure there is about a 0.25 ton per acre yield increase and a 20-point RFV decrease for every five-day delay in harvesting first cutting. Hence, the swing from 200 RFV down to 160 RFV is a gain of 0.5 tons per acre. That’s significant! Although a lot has changed in the past twenty years, the alfalfa yield-quality tradeoff decision remains alive and well. It’s why we will still be talking about, researching, harvesting, and scissors-cutting alfalfa twenty years from now.
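The rule-of-thumb arithmetic above can be written out as a small Python sketch. The 0.25 ton and 20 RFV points per five-day delay come straight from the rule of thumb; the function name and the assumption that both changes scale linearly with the delay are mine, and any given year may not follow the average.

```python
def first_cut_tradeoff(rfv_now, rfv_target,
                       tons_per_step=0.25, rfv_per_step=20.0, days_per_step=5):
    """Estimate harvest delay and yield gain for a chosen drop in RFV.

    Assumes the rule of thumb (0.25 ton/acre gained and 20 RFV points
    lost per five-day delay in first cutting) scales linearly.
    """
    steps = (rfv_now - rfv_target) / rfv_per_step   # number of five-day steps
    return {
        "delay_days": steps * days_per_step,
        "yield_gain_tons_per_acre": steps * tons_per_step,
    }


# The swing from 200 RFV down to 160 RFV.
print(first_cut_tradeoff(200, 160))
```

For the 200-to-160 swing this reproduces the 0.5 ton per acre gain, at the cost of roughly a ten-day harvest delay.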